REINFORCEMENT LEARNING Learning control algorithms for power electronic systems: from zero to hero in minutes


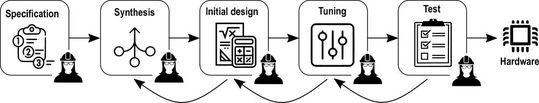

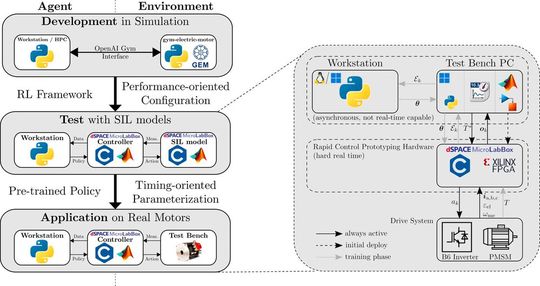

Reinforcement learning-based control design opens up the door to a new world of optimal controllers, which can be learned in minutes without human intervention. Traditional model-based control design techniques require significant development effort and, therefore, this article explores control design automation opportunities for power electronic systems.

The world of power electronics is heterogeneous and extensive: it includes AC-DC, DC-AC, AC-AC and DC-DC conversion for various load and source combinations and numbers of phases, and it covers a power range from milliwatts to megawatts. Many of these power electronic converters require proper control engineering to ensure safe, reliable and efficient operation. Due to the heterogeneity of the field, this requires an enormous amount of engineering effort across all industrial applications, both in the control development phase and in the control tuning phase for specific converters.

Traditionally, linear feedback controllers, such as the classic PID controller, are used for this purpose.

However, this comparatively simple approach often proves difficult in practice: non-linear system behavior, input and state limitations, and parasitic influences lead to significant deviations between the theoretical linear control design and the behavior of the controlled system in the real application. Especially for more complex converters, e.g., in highly utilized drive systems or uninterruptible power supplies, the corresponding control concepts have become more and more complex. They include heuristic adaptation controllers with nested control loops, which can add up to a three-digit number of tunable control parameters. These historically evolved control concepts are cumbersome to improve and to adapt to new converter hardware designs, so they tie up enormous personnel resources. And yet they generally do not exhibit optimal control behavior because of their linear feedback approach.
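To make the linear baseline and its input limitation concrete, the following is a minimal sketch of a discrete PI current controller with a voltage limit and a simple conditional-integration anti-windup. The plant (an RL load integrated with forward Euler), all parameter values and the names `PIController` and `simulate_rl_load` are assumptions chosen for illustration; they do not come from a specific converter.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Discrete PI controller with output clamping (illustrative values only)."""
    kp: float          # proportional gain in V/A
    ki: float          # integral gain in V/(A*s)
    dt: float          # sampling time in s
    u_min: float       # lower voltage limit in V
    u_max: float       # upper voltage limit in V
    integral: float = 0.0

    def step(self, reference: float, measurement: float) -> float:
        error = reference - measurement
        candidate = self.integral + self.ki * error * self.dt
        u = self.kp * error + candidate
        u_sat = min(max(u, self.u_min), self.u_max)
        # Anti-windup: keep the integrator frozen while the output is saturated.
        if u == u_sat:
            self.integral = candidate
        return u_sat


def simulate_rl_load(controller, i_ref, steps, resistance=1.0, inductance=1e-3):
    """Closed loop with a hypothetical RL load: L di/dt = v - R i (forward Euler)."""
    current, history = 0.0, []
    for _ in range(steps):
        voltage = controller.step(i_ref, current)
        current += controller.dt / inductance * (voltage - resistance * current)
        history.append(current)
    return history


controller = PIController(kp=5.0, ki=5000.0, dt=1e-5, u_min=-20.0, u_max=20.0)
currents = simulate_rl_load(controller, i_ref=5.0, steps=2000)
print(f"current after 20 ms: {currents[-1]:.3f} A")
```

Even in this small example, the voltage limit already forces an extra design decision (the anti-windup rule) that the purely linear design does not cover.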

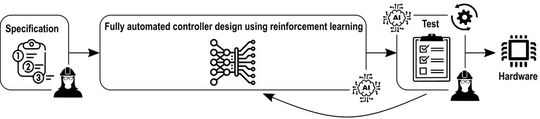

Model predictive control (MPC), on the other hand, is an interesting alternative because it interprets control as an optimization problem and can thus theoretically achieve optimal control performance. By considering the possibly non-linear system behavior, the system limitations and the future system behavior, MPC differs fundamentally from classic feedback controllers and has been a major topic in academic research for roughly 40 years.
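The idea of treating control as an optimization problem can be sketched with a finite-control-set MPC, which enumerates all switching sequences over a short horizon, predicts the resulting currents with a model, and applies only the first action of the best sequence. The example below is a simplified illustration, not an industrial implementation: the RL-load model, the three switching states, the current limit and all numeric values are assumptions.

```python
import itertools

# Hypothetical plant: RL load fed by a three-level stage (all values illustrative).
V_DC, R, L, DT = 20.0, 1.0, 1e-3, 1e-4
A = 1.0 - R * DT / L          # forward-Euler state coefficient
B = DT / L                    # forward-Euler input coefficient in A/V
I_MAX = 8.0                   # assumed current limit in A
SWITCH_STATES = (-1, 0, 1)    # normalized output voltage levels


def predict(i, u):
    """One-step current prediction of the assumed model."""
    return A * i + B * V_DC * u


def fcs_mpc_step(i_now, i_ref, horizon=3):
    """Return the first switching state of the cheapest admissible sequence."""
    best_cost, best_first = float("inf"), 0
    for seq in itertools.product(SWITCH_STATES, repeat=horizon):
        i, cost = i_now, 0.0
        for u in seq:
            i = predict(i, u)
            if abs(i) > I_MAX:            # state constraint: discard the sequence
                cost = float("inf")
                break
            cost += (i_ref - i) ** 2      # quadratic tracking cost
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


def run_closed_loop(i_ref=5.0, steps=50):
    """Receding-horizon loop: re-optimize at every sampling instant."""
    i, trace = 0.0, []
    for _ in range(steps):
        i = predict(i, fcs_mpc_step(i, i_ref))
        trace.append(i)
    return trace


trace = run_closed_loop()
print(f"peak current: {max(abs(i) for i in trace):.2f} A")
```

The number of candidate sequences grows exponentially with the horizon (here 3^3 = 27 per sampling instant), which hints at why solving such problems in real time is demanding on embedded hardware.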

Although MPC has earned tremendous merits in the academic world and has proven its versatility in almost every power electronic converter type, it has not achieved a breakthrough in industry. Apart from a few niche applications (e.g., ABB’s megawatt drive series), heuristic feedback control concepts are still the backbone of industrial control systems in the field. The main reason is likely the increased complexity of MPC compared with linear feedback control, which requires more development and tuning effort as well as engineers with a higher level of education. The resulting increase in development effort and time-to-market stands in the way of widespread use of MPC in the power electronics industry.

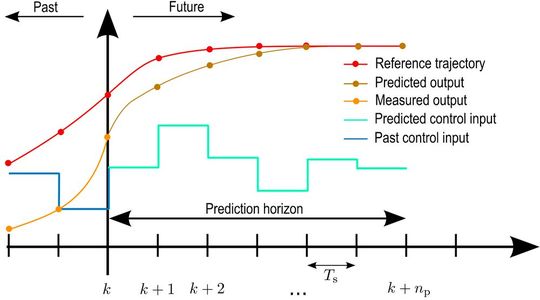

Fortunately, reinforcement learning (RL)-based control approaches offer an alternative way of achieving optimal control. Instead of solving a complex, model-based optimization problem in real time, RL learns optimal control actions from past data. Similar to human learning, RL evaluates past actions and adapts its policy such that favorable control actions, e.g., those leading to better tracking performance or higher converter efficiency in steady state, become more likely in the future. To prevent critical errors, especially during the early training phase of this data-driven controller, an additional safeguard is deployed that overrides unsafe actions which would likely lead to critical overcurrent or overvoltage situations.
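The interplay of learning from past data and a safeguard can be sketched with tabular Q-learning on a toy current-control task. This is a deliberately simple stand-in, not the method of any particular study: the plant model, the discretization, the reward, the hyperparameters and the rule used by the hypothetical `safeguard` function (reject any action whose predicted current exceeds the limit and fall back to the action with the smallest predicted current magnitude) are all assumptions.

```python
import random

ACTIONS = (-1, 0, 1)          # normalized output voltage levels
A, B_VDC = 0.9, 2.0           # assumed discrete RL-load model: i+ = A*i + B_VDC*u
I_MAX, I_REF = 8.0, 5.0       # assumed current limit and reference in A
N_BINS = 33                   # current discretization (0.5 A per bin)


def plant(i, u):
    return A * i + B_VDC * u


def safeguard(i, action_idx):
    """Override actions whose predicted current would exceed the limit."""
    if abs(plant(i, ACTIONS[action_idx])) <= I_MAX:
        return action_idx
    return min(range(len(ACTIONS)), key=lambda k: abs(plant(i, ACTIONS[k])))


def to_state(i):
    idx = round((i + I_MAX) / (2 * I_MAX) * (N_BINS - 1))
    return max(0, min(N_BINS - 1, idx))


def train(episodes=200, steps=50, alpha=0.2, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_BINS)]
    peak = 0.0
    for _ in range(episodes):
        i = rng.uniform(-I_MAX, I_MAX)
        for _ in range(steps):
            s = to_state(i)
            if rng.random() < epsilon:                    # explore
                a = rng.randrange(len(ACTIONS))
            else:                                         # exploit
                a = max(range(len(ACTIONS)), key=lambda k: q[s][k])
            a = safeguard(i, a)                           # applied action is always safe
            i = plant(i, ACTIONS[a])
            peak = max(peak, abs(i))
            reward = -(I_REF - i) ** 2                    # penalize tracking error
            s_next = to_state(i)
            q[s][a] += alpha * (reward + gamma * max(q[s_next]) - q[s][a])
    return q, peak


q_table, peak_current = train()
print(f"largest current magnitude seen during training: {peak_current:.2f} A")
```

Because the update uses the action that was actually applied, the agent learns from the safeguarded behavior, and the current limit holds even while the policy is still exploring randomly.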

In this way, an explicit control policy, typically encoded within an artificial neural network, is learned. Thanks to embedded hardware accelerators, such as field-programmable gate arrays (FPGAs) or neural processing units (NPUs), these policies can be executed in the microsecond range, which makes them feasible for many power electronic applications. The learning process itself does not need to be executed in real time; it can be moved either to a prior simulation-based offline training or to an edge-learning-based training at test benches. Both approaches can be fully automated and do not require human intervention, which is favorable regarding development resources.
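Why an explicit neural-network policy is cheap to evaluate can be seen from a small sketch: inference is just a fixed sequence of matrix-vector products and element-wise non-linearities. The network size, the observation layout and the random (untrained) weights below are placeholders chosen for illustration, and the helper names are hypothetical.

```python
import random


def init_policy(layer_sizes, seed=0):
    """Create random placeholder weights; a real policy would be trained."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        params.append((weights, biases))
    return params


def dense(weights, biases, x):
    """Affine layer: one multiply-accumulate per weight."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def policy_action(params, observation):
    """Forward pass with ReLU hidden layers; returns the index of the best action."""
    x = list(observation)
    for weights, biases in params[:-1]:
        x = [max(v, 0.0) for v in dense(weights, biases, x)]
    scores = dense(*params[-1], x)
    return max(range(len(scores)), key=scores.__getitem__)


def mac_count(params):
    """Multiply-accumulate operations needed for one policy evaluation."""
    return sum(len(weights) * len(weights[0]) for weights, _ in params)


# Assumed observation: [measured current, reference current, tracking error].
policy = init_policy((3, 16, 16, 3))
action = policy_action(policy, [4.2, 5.0, 0.8])
print(f"action index: {action}, MACs per evaluation: {mac_count(policy)}")
```

The operation count is fixed and known in advance, so the execution time is deterministic, unlike an online optimization whose effort can vary from one sampling instant to the next.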

While RL-based control for power electronic applications is still in the early research stage, recent experimental proof-of-concept studies for different power electronic converter applications show that learning optimal control policies is possible within just a few minutes, opening the door to a completely new paradigm for control design (see example video below). These encouraging intermediate results motivate further research in this direction, particularly with a view to transferring the approach to other power electronics applications and to further methodological improvements, e.g., reducing both training times and the numerical effort on the embedded control devices. The prospect is that optimal control performance, as theoretically possible with MPC, can be achieved with minimal (human) development effort in heterogeneous industrial power electronic applications.

Coffee machine vs. machine learning: who is quicker? Watch this video to find out:

References

- M. Schenke, B. Haucke-Korber and O. Wallscheid, "Finite-Set Direct Torque Control via Edge-Computing-Assisted Safe Reinforcement Learning for a Permanent-Magnet Synchronous Motor," in IEEE Transactions on Power Electronics, vol. 38, no. 11, pp. 13741-13756, Nov. 2023, doi: 10.1109/TPEL.2023.3303651

- D. Weber, M. Schenke and O. Wallscheid, "Safe Reinforcement Learning-Based Control in Power Electronic Systems," 2023 International Conference on Future Energy Solutions (FES), Vaasa, Finland, 2023, doi: 10.1109/FES57669.2023.10182718

- S. Zhang, O. Wallscheid and M. Porrmann, "Machine Learning for the Control and Monitoring of Electric Machine Drives: Advances and Trends," IEEE Open Journal of Industry Applications, vol. 4, pp. 188-214, 2023, doi: 10.1109/OJIA.2023.3284717
